3D NEURAL BEAMFORMING FOR MULTI-CHANNEL SPEECH SEPARATION AGAINST LOCATION UNCERTAINTY

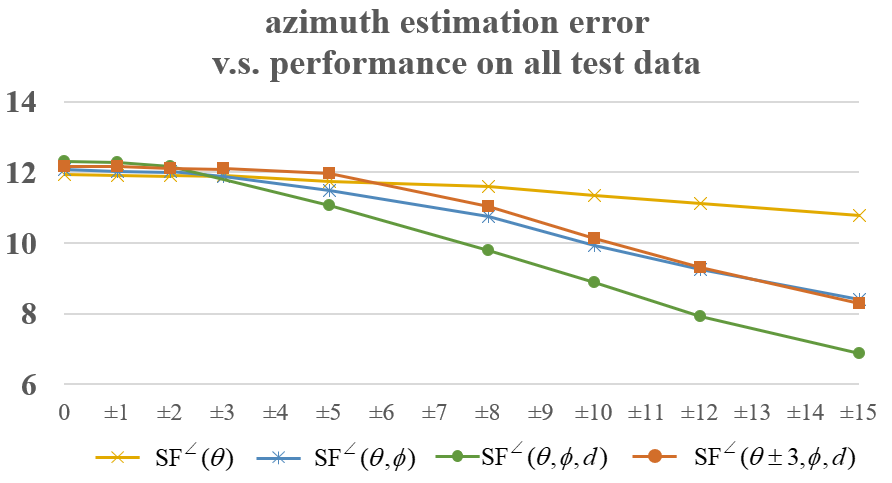

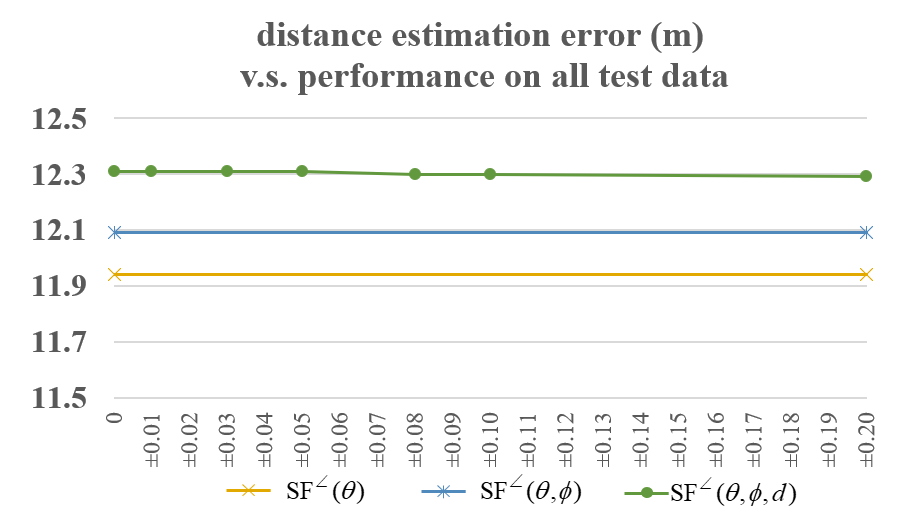

Abstract: Multi-channel speech separation using the speaker's directional information has demonstrated significant gains over blind separation. However, it has two limitations. First, performance degrades substantially when the directions of arrival of two sounds are close. Second, the result relies heavily on precise estimation of the speaker's direction. To overcome these issues, this paper proposes 3D features and an associated 3D neural beamformer for speech separation. Previous work in this area is extended in two important directions. First, the traditional 1D directional beam patterns are generalized to 3D. This makes it possible to extract speech from any target region in 3D space, so speakers in the same direction but at different elevations or distances become separable. Second, to handle speaker location uncertainty, the previously proposed spatial feature is extended to a new 3D region feature. The proposed feature and model are evaluated in an in-car scenario. Experimental results demonstrate that the proposed 3D region feature and 3D beamformer achieve performance comparable to that obtained with ground-truth speaker location input.
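The claim that speakers in the same direction but at different distances become separable can be illustrated with a small geometric sketch. It is not the paper's feature or beamformer. It only compares inter-microphone time delays under a direction-only (far-field, plane-wave) model with delays computed from a full 3D source position. The 4-microphone linear array, the 5 cm spacing, the source positions, the speed of sound, and the function names are all assumptions chosen for illustration.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def far_field_delays(mics, azimuth):
    """Delays (s) relative to mic 0 for a plane wave arriving from `azimuth`.

    Direction-only model: the result does not depend on source distance.
    """
    direction = np.array([np.cos(azimuth), np.sin(azimuth), 0.0])
    # Mics further along the arrival direction receive the wavefront earlier.
    delays = -(mics @ direction) / SPEED_OF_SOUND
    return delays - delays[0]


def near_field_delays(mics, source):
    """Delays (s) relative to mic 0 for a point source at a 3D position."""
    distances = np.linalg.norm(mics - source, axis=1)
    delays = distances / SPEED_OF_SOUND
    return delays - delays[0]


# Hypothetical 4-mic linear array along the x axis, 5 cm spacing.
mics = np.array([[i * 0.05, 0.0, 0.0] for i in range(4)])

# Two hypothetical speakers on the same azimuth (60 degrees), different distances.
azimuth = np.deg2rad(60.0)
unit = np.array([np.cos(azimuth), np.sin(azimuth), 0.0])
near_source = 0.3 * unit  # 0.3 m from mic 0
far_source = 1.5 * unit   # 1.5 m from mic 0

ff = far_field_delays(mics, azimuth)
nf_near = near_field_delays(mics, near_source)
nf_far = near_field_delays(mics, far_source)

print("direction-only delays (us):", np.round(ff * 1e6, 1))
print("3D delays, near source (us):", np.round(nf_near * 1e6, 1))
print("3D delays, far source (us): ", np.round(nf_far * 1e6, 1))
```

Under the direction-only model both speakers share one delay pattern, so a beam steered by azimuth alone cannot tell them apart. The position-based delays differ between the two sources, which is the kind of cue a 3D formulation can exploit in a confined space such as a car cabin.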